AI Workload Simulation with C++ and TLM 2.0

System-level simulation demonstrating the impact of hardware optimization on an AI workload (matrix multiplication).

Project Overview

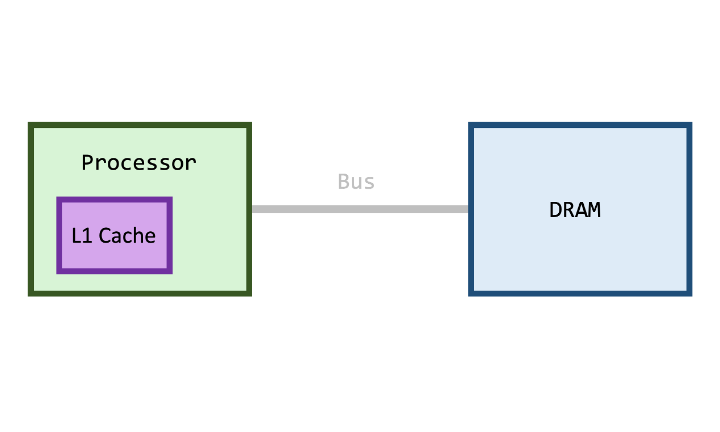

This project is a system-level simulation that explores how hardware optimization affects AI workloads. It implements a cache simulation in C++ with SystemC TLM 2.0 and uses it to show how architectural decisions, such as the cache configuration, can significantly change the performance of matrix multiplication, a core operation in AI applications.

Key Features

- SystemC TLM 2.0 Implementation: Used SystemC’s Transaction Level Modeling to simulate communication between components as transactions rather than pin-level signals

- Cache Performance Analysis: Measured the impact of different cache configurations on matrix multiplication performance

- Performance Optimization: Achieved significant speedup through cache design and cache-friendly memory access patterns

- AI Workload Simulation: Used matrix multiplication, a core AI workload, to show the practical performance implications of these design choices
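To make the effect of memory access patterns concrete, here is a minimal sketch, assuming a set-associative cache with LRU replacement and row-major `double` matrices; it is not the repository's implementation. `CacheModel`, `LoopOrder`, and `matmul_misses` are hypothetical names, and the model counts hits and misses only, with no timing. It replays the address stream of a naive matrix multiplication in two loop orders so that their miss counts can be compared.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <list>
#include <vector>

// Hypothetical set-associative cache with LRU replacement.
// Counts hits and misses only; no latency is modeled.
class CacheModel {
public:
    CacheModel(std::size_t size_bytes, std::size_t line_bytes, std::size_t ways)
        : line_bytes_(line_bytes), ways_(ways),
          num_sets_(size_bytes / (line_bytes * ways)), sets_(num_sets_) {
        assert(num_sets_ > 0 && "cache must hold at least one set");
    }

    // Simulates one access to `addr`; returns true on a hit.
    bool access(std::uint64_t addr) {
        const std::uint64_t line = addr / line_bytes_;
        const std::size_t set = static_cast<std::size_t>(line % num_sets_);
        const std::uint64_t tag = line / num_sets_;
        std::list<std::uint64_t>& lru = sets_[set];  // front = most recently used
        for (auto it = lru.begin(); it != lru.end(); ++it) {
            if (*it == tag) {
                lru.splice(lru.begin(), lru, it);  // promote the line to MRU
                ++hits;
                return true;
            }
        }
        ++misses;
        lru.push_front(tag);                     // install the missing line as MRU
        if (lru.size() > ways_) lru.pop_back();  // evict the LRU line
        return false;
    }

    std::size_t hits = 0;
    std::size_t misses = 0;

private:
    std::size_t line_bytes_;
    std::size_t ways_;
    std::size_t num_sets_;
    std::vector<std::list<std::uint64_t>> sets_;
};

enum class LoopOrder { IJK, IKJ };

// Replays the accesses of C += A * B for n x n row-major double matrices and
// returns the miss count. The matrices are placed back to back from address 0
// (an arbitrary, hypothetical layout). `cache` is taken by value so every call
// starts from a cold copy.
std::size_t matmul_misses(std::size_t n, LoopOrder order, CacheModel cache) {
    const std::uint64_t elem = sizeof(double);
    const std::uint64_t base_a = 0;
    const std::uint64_t base_b = n * n * elem;
    const std::uint64_t base_c = 2 * n * n * elem;
    auto addr = [n, elem](std::uint64_t base, std::size_t row, std::size_t col) {
        return base + (row * n + col) * elem;
    };
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t x = 0; x < n; ++x)
            for (std::size_t y = 0; y < n; ++y) {
                // IJK: middle loop is j, inner loop is k (B is walked down a column).
                // IKJ: middle loop is k, inner loop is j (B is walked along a row).
                const std::size_t j = (order == LoopOrder::IJK) ? x : y;
                const std::size_t k = (order == LoopOrder::IJK) ? y : x;
                cache.access(addr(base_a, i, k));
                cache.access(addr(base_b, k, j));
                cache.access(addr(base_c, i, j));
            }
    return cache.misses;
}
```

For example, with `CacheModel cache(4096, 64, 4)` (4 KiB, 4-way, 64-byte lines) and n = 64, the i-j-k order misses on every access to B because each column of B maps onto too few sets, while the i-k-j order streams along rows and incurs substantially fewer misses.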

Technical Implementation

The project combines C++ with SystemC’s TLM 2.0 framework to build a realistic simulation environment. The cache model captures memory hierarchy behavior, such as cache hits and misses, which enables detailed performance analysis of AI workloads.
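The following sketch shows, in plain C++, how a memory hierarchy can be expressed in the blocking-transport style used by TLM 2.0 loosely-timed models: each target's `b_transport` adds its latency to a delay passed by reference, and a cache forwards misses to the next level. It assumes a direct-mapped, write-allocate cache with fixed latencies, and it replaces `tlm::tlm_generic_payload` and `sc_core::sc_time` with a hypothetical `Transaction` struct and a `double` in nanoseconds so that it compiles without SystemC. `Target`, `Memory`, and `DirectMappedCache` are illustrative names, not the project's classes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for tlm::tlm_generic_payload: only an address and a direction.
struct Transaction {
    std::uint64_t address;
    bool is_write;
};

// Hypothetical target interface shaped like TLM 2.0's blocking b_transport(trans, delay).
// Each target adds its own latency to `delay_ns` (a plain double instead of sc_time).
class Target {
public:
    virtual ~Target() = default;
    virtual void b_transport(Transaction& trans, double& delay_ns) = 0;
};

// Main memory with a fixed access latency.
class Memory : public Target {
public:
    explicit Memory(double latency_ns) : latency_ns_(latency_ns) {}
    void b_transport(Transaction& /*trans*/, double& delay_ns) override {
        delay_ns += latency_ns_;
    }

private:
    double latency_ns_;
};

// Direct-mapped cache that forwards misses to the next level of the hierarchy.
// Writes are treated like reads (write-allocate); write-back traffic is not modeled.
class DirectMappedCache : public Target {
public:
    DirectMappedCache(std::size_t num_lines, std::uint64_t line_bytes, double hit_ns, Target& next)
        : tags_(num_lines), valid_(num_lines, false),
          line_bytes_(line_bytes), hit_ns_(hit_ns), next_(next) {
        assert(num_lines > 0 && line_bytes > 0);
    }

    void b_transport(Transaction& trans, double& delay_ns) override {
        delay_ns += hit_ns_;  // the tag lookup is paid on every access
        const std::uint64_t line = trans.address / line_bytes_;
        const std::size_t index = static_cast<std::size_t>(line % tags_.size());
        const std::uint64_t tag = line / tags_.size();
        if (valid_[index] && tags_[index] == tag) {
            ++hits;
            return;
        }
        ++misses;
        next_.b_transport(trans, delay_ns);  // fetch the line from the next level
        tags_[index] = tag;
        valid_[index] = true;
    }

    std::size_t hits = 0;
    std::size_t misses = 0;

private:
    std::vector<std::uint64_t> tags_;
    std::vector<bool> valid_;
    std::uint64_t line_bytes_;
    double hit_ns_;
    Target& next_;
};
```

With a 100 ns memory behind a 1 ns cache, a first read of an address accumulates 101 ns of delay (a miss) and a repeated read adds 1 ns (a hit). In an actual SystemC model, the same chain would typically be built from `sc_module`s connected through `tlm_utils::simple_initiator_socket` and `tlm_utils::simple_target_socket`.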

Results

Through systematic analysis, the project identified performance bottlenecks in AI workload execution and showed that appropriate cache design can yield substantial performance improvements. The simulation offers insight into system-level optimization strategies for AI applications.
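Cache design is one side of this trade-off; on the software side, a standard complement is loop tiling (blocking), which reorders matrix multiplication so that small sub-blocks of A, B, and C are reused while they are still in cache. The sketch below is a generic illustration of that technique, assuming square, row-major `double` matrices; it is not code taken from the repository, and `Matrix`, `matmul_tiled`, and its `tile` parameter are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<double>;  // n x n, row-major

// Reference i-k-j multiplication: C += A * B.
void matmul_naive(const Matrix& a, const Matrix& b, Matrix& c, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t k = 0; k < n; ++k)
            for (std::size_t j = 0; j < n; ++j)
                c[i * n + j] += a[i * n + k] * b[k * n + j];
}

// Tiled version: iterates over tile x tile sub-blocks so the active pieces of
// A, B and C can stay cache-resident. Edge tiles are clipped with std::min,
// so n need not be a multiple of `tile`.
void matmul_tiled(const Matrix& a, const Matrix& b, Matrix& c, std::size_t n, std::size_t tile) {
    assert(tile > 0);
    for (std::size_t ii = 0; ii < n; ii += tile)
        for (std::size_t kk = 0; kk < n; kk += tile)
            for (std::size_t jj = 0; jj < n; jj += tile)
                for (std::size_t i = ii; i < std::min(ii + tile, n); ++i)
                    for (std::size_t k = kk; k < std::min(kk + tile, n); ++k) {
                        const double a_ik = a[i * n + k];  // reused across the j loop
                        for (std::size_t j = jj; j < std::min(jj + tile, n); ++j)
                            c[i * n + j] += a_ik * b[k * n + j];
                    }
}
```

Both functions accumulate each element of C over k in ascending order, so they produce identical results; the tile size would normally be tuned so that three tile-by-tile blocks fit in the target cache.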

Skills Demonstrated

- C++ Programming: Advanced C++ features and object-oriented design

- SystemC TLM 2.0: Transaction Level Modeling for system simulation

- Performance Optimization: Cache design and memory access optimization

- System Architecture: Understanding of memory hierarchy and its impact on performance

- AI Workload Analysis: Application of performance analysis to AI-specific workloads

Repository

The complete implementation, including source code, documentation, and performance analysis, is available in the GitHub repository.